Amplifying User Intelligence with Chatbot Feedback Loops

This post was originally published on chatbotsmagazine.com.

So you’ve just set up your chatbot, and it’s working well — congrats! Now for the bad news: if you don’t set up a feedback loop right away, your chatbot could already be falling short of its potential. But what does a great chatbot feedback loop look like, and how can you harness it to deliver more value to users? In my work with companies adopting chatbots, feedback loops are consistently one of the most overlooked components of the system. This is a shame, because they don’t have to be complicated in order to add value. Let’s take a closer look and demystify feedback loops: why you need them, what types of feedback to collect, and how to turn that feedback into continuous improvement that will distance you from your competition.

Why Feedback Loops are Crucial to Chatbots

So why does your chatbot need a feedback loop, anyway? Without one, you’re limiting the intelligence that you could be getting back from your users, and missing an easy opportunity to improve by adapting to user needs. And if you want to build a chatbot that gets rave reviews time after time, users’ opinions matter. Let’s start by identifying the main ways that feedback loops can help you provide a great experience for your users.

- Understand your users. They’re a way to understand what your users really want. Because chatbots are inherently open-ended, your users will say things that you never anticipated. Looking at what people are actually saying lets you figure out how your chatbot should handle these unanticipated conversational turns. For example, you might have overlooked some common ways that users ask a bot for help. Or users might enjoy your chatbot so much, they start looking for it to do additional things.

- Keep it fresh. They help you keep your chatbot current. Left to itself, even an amazing chatbot will gradually decay. By looking at failure cases, you’ll be able to catch the big changes and avoid one of the most common chatbot pitfalls. You’ll have to build in new catchphrases, hot topics, and product names to keep your chatbot up-to-date.

- Adapt to evolving needs. They allow you to meet user expectations. Although great chatbots manage user expectations, people will naturally have higher expectations for conversational interfaces than for non-conversational ones. This makes it even more important for chatbots to continuously improve through feedback.

How to Collect Chatbot Feedback

Convinced that feedback loops could add value to your chatbot? Now we’ll dive into what we actually mean when we say “feedback.” Let’s review some of the most common sources here.

In-conversation feedback sources

Except for some edge cases, you’ll generally be logging the complete conversation between your bot and user. This is an amazing source of feedback for how your interactions are going, and my top choice for bots that are early in development.

Just take a sample of conversations or turns and hand-score them according to your own success criteria. If you’re not sure what those are, here are a few sample questions you could consider using (on a 0–5 or 0–3 scale). If you’re looking at turns, consider:

- “Did my chatbot understand the user’s intent?”

- “Was the chatbot’s response appropriate to the user’s query?”

If you’re looking at entire conversations at a time, you might ask:

- “Am I proud of my chatbot’s performance in this conversation?”

- “Did the user ultimately accomplish at least one goal of the conversation?”

Unless your answer to the above questions is a definitive “yes!”, keep track of the reasons that are holding you back. Some common issues are missing intents, bad handling of failure cases, or repetitive or unnatural dialogue. This is the quickest way to help you really understand what’s going on, and you should do it regularly, especially early on.
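To make the hand-scoring routine concrete, here’s a minimal sketch in Python. It assumes you can export logged turns as a list, and that each review is a small record with a `score` (on the 0–3 scale) and an optional `issue` label. Those field names, the function names, and the passing threshold are all my own placeholders, not part of any particular chatbot platform.

```python
import random
from collections import Counter
from statistics import mean

def sample_turns(turns, k, seed=0):
    """Return a reproducible random sample of up to k logged turns."""
    rng = random.Random(seed)
    return rng.sample(turns, min(k, len(turns)))

def summarize_reviews(reviews, passing_score=3):
    """Average the hand scores and tally the reasons behind low scores.

    Each review is a dict with a "score" and an optional "issue" label
    (hypothetical field names chosen for this sketch).
    """
    scores = [r["score"] for r in reviews]
    issues = Counter(
        r["issue"]
        for r in reviews
        if r["score"] < passing_score and r.get("issue")
    )
    return {"mean_score": mean(scores), "top_issues": issues.most_common()}

# Example: four turns scored by hand on a 0-3 scale.
reviews = [
    {"score": 3, "issue": None},
    {"score": 1, "issue": "missing intent"},
    {"score": 0, "issue": "missing intent"},
    {"score": 2, "issue": "unnatural dialogue"},
]
summary = summarize_reviews(reviews)
```

Fixing the random seed means you and a colleague can score the same sample, and the issue tally gives you a rough priority list for the next round of fixes.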

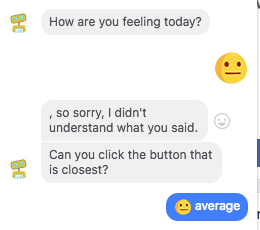

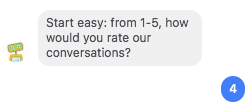

While the above method is extremely useful, you might eventually also want to try something that takes a little less effort on your part. You can ask the user in the flow of conversation to rate the conversation. For instance, here’s how Woebot does it:

Pretty simple, right? Not only can this number be useful to track over time, but you can ask the user to elaborate. Most of the time, this is pretty natural in the flow of conversation, so uptake is higher than other methods of asking for feedback.

Additionally, you might keep track of quantitative statistics about each conversation or user, such as conversation length, number of times a user returns to use the chatbot, or the percentage of queries for which your chatbot has to enter an “I don’t know” flow.
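As a rough illustration, the three statistics above can be computed from a flat log of user turns. This sketch assumes a hypothetical log schema in which each turn carries a `user_id`, a `conversation_id`, and an `is_fallback` flag marking turns that triggered the “I don’t know” flow; your own logs will almost certainly look different.

```python
from collections import defaultdict

def conversation_metrics(turns):
    """Compute simple engagement statistics from logged user turns.

    Each turn is a dict with "user_id", "conversation_id", and
    "is_fallback" (hypothetical field names chosen for this sketch).
    """
    turns_per_conversation = defaultdict(int)
    conversations_per_user = defaultdict(set)
    fallbacks = 0
    total = 0
    for turn in turns:
        turns_per_conversation[turn["conversation_id"]] += 1
        conversations_per_user[turn["user_id"]].add(turn["conversation_id"])
        fallbacks += bool(turn["is_fallback"])
        total += 1
    if total == 0:
        return None  # nothing logged yet
    returning = sum(1 for c in conversations_per_user.values() if len(c) > 1)
    return {
        "avg_turns_per_conversation": total / len(turns_per_conversation),
        "returning_user_rate": returning / len(conversations_per_user),
        "fallback_rate": fallbacks / total,
    }

# Example log: user "a" comes back for a second conversation.
log = [
    {"user_id": "a", "conversation_id": "c1", "is_fallback": False},
    {"user_id": "a", "conversation_id": "c1", "is_fallback": True},
    {"user_id": "a", "conversation_id": "c2", "is_fallback": False},
    {"user_id": "b", "conversation_id": "c3", "is_fallback": False},
]
metrics = conversation_metrics(log)
```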

Just make sure that your metrics are tailored to your use case. For instance, if you’re running a customer service chatbot, the ideal might be a short but efficient conversation, while for a social or gaming chatbot, it might be longer conversations that are optimized for engagement.

Whichever metrics you choose, I always recommend combining them with a qualitative rating of sample conversations to make sure you’re interpreting the numbers correctly.

External feedback sources

Depending on the purpose of your bot, at a later stage, some of your most valuable feedback might come from data outside the chatbot itself. For instance, you might assess a customer service chatbot by seeing whether users who talk to it churn at a higher or lower rate than others. A chatbot that supplies product information might be graded on whether users ultimately make a purchase.

Just be careful when designing these metrics that they’re not picking up on other things. For example, chatbot users might differ from your other users in being more tech-savvy, which could account for differences in behavior.
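One simple way to guard against this is to compare churn within groups of similar users rather than across your whole user base. The sketch below assumes each user record has a `segment` label (for example, a tech-savviness bucket you define yourself), a `used_chatbot` flag, and a `churned` flag. All three fields are hypothetical, and splitting by segment is only a first check, not a full causal analysis.

```python
from collections import defaultdict

def churn_by_segment(users):
    """Compare churn for chatbot and non-chatbot users within each segment.

    Each user is a dict with "segment", "used_chatbot", and "churned"
    (hypothetical field names chosen for this sketch).
    """
    # (segment, used_chatbot) -> [churned count, total count]
    counts = defaultdict(lambda: [0, 0])
    for user in users:
        key = (user["segment"], bool(user["used_chatbot"]))
        counts[key][0] += bool(user["churned"])
        counts[key][1] += 1
    rates = {}
    for (segment, used_chatbot), (churned, total) in counts.items():
        group = "chatbot" if used_chatbot else "other"
        rates.setdefault(segment, {})[group] = churned / total
    return rates

# Example with made-up records.
users = [
    {"segment": "tech_savvy", "used_chatbot": True, "churned": False},
    {"segment": "tech_savvy", "used_chatbot": True, "churned": True},
    {"segment": "tech_savvy", "used_chatbot": False, "churned": True},
    {"segment": "general", "used_chatbot": False, "churned": False},
]
rates = churn_by_segment(users)
```

If the gap between chatbot users and everyone else shrinks or disappears once you look within segments, the original difference was probably driven by who uses the chatbot rather than by the chatbot itself.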

If you’re building chatbots as part of a suite of products or channels, don’t ignore your standard product feedback sources. These include support emails, usability testing, social channels, and whatever else you’re using. Comparing chatbots to other channels will give you useful insights and a broader view of customer feedback.

How Do I Turn My Feedback into Improvements?

So now that you’ve got your feedback, how do you actually close the loop so that you can reap the benefits of continuous improvement? There are two main ways of doing this:

Manual, human-led improvements

A lot of what I’ve talked about above falls into this category. You take a look at your feedback data, come to an understanding of what’s going wrong and why, and prioritize fixes or improvements accordingly.

For instance, you might choose to add new intents to increase your coverage on user queries. Or you might tweak your onboarding to set expectations around what your bot can do.

Once you roll these fixes out, you can check in on the same feedback sources to validate that you’ve made a change for the better.

Semi-automated improvements

If you have a more sophisticated chatbot that’s using non-trivial machine learning, you have more options at your disposal.

As you generate more and more conversational data, you can use this data to retrain the underlying machine learning models. In most cases, the more data you have, the better the accuracy and coverage you get.

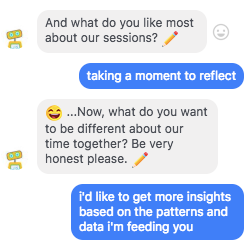

Another strategy is to ask users to teach you things when you fail, and then update your models accordingly. Going back to Woebot, here’s an interaction where it learns more about the meaning of a previously unknown emoticon:

You can then review the results of interactions like those and update your underlying language models to increase coverage.

Although it’s technically feasible for nearly all use cases, I don’t recommend fully automating model updates, because you risk losing control of the direction of your chatbot.

By now we’re all familiar with the cautionary tale of Tay, which turned from a fun chatbot to a deeply offensive PR problem in under a day.

In general, you should roll out model updates slowly and test them with a small subset of users first before going live to your entire user base, especially for more well-established chatbots. On the other hand, don’t wait too long to update your models, or you’ll risk having your chatbot go out of date.
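A common way to expose an update to a small subset of users is deterministic bucketing: hash each user ID into one of 100 buckets and send only the lowest buckets to the new model. Here’s a minimal sketch of that idea. The function names, the `salt` value, and the model labels are placeholders I’ve made up for illustration.

```python
import hashlib

def in_rollout(user_id, rollout_percent, salt="model-update"):
    """Deterministically decide whether a user gets the updated model.

    The same user always lands in the same bucket, so raising
    rollout_percent only ever adds users to the rollout group.
    """
    digest = hashlib.sha256(f"{salt}:{user_id}".encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) % 100  # bucket in 0..99
    return bucket < rollout_percent

def pick_model(user_id, rollout_percent):
    """Route a user to the candidate model or the current one."""
    return "candidate" if in_rollout(user_id, rollout_percent) else "current"

# Start with roughly 5% of users, then raise the percentage as the
# feedback metrics hold up.
model_for_user = pick_model("user-123", rollout_percent=5)
```

Changing the salt for each new model reshuffles the buckets, so the same users aren’t always the first to see every update.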

Is My Feedback Loop Working?

So now that you’ve got a feedback loop going, how do you know it’s actually working? Hopefully, you’re getting a lot of great improvement ideas by analyzing your conversations. But it can still be worthwhile to set metrics for success here. Here are a few of my favorite ways of assessing your chatbot feedback loop:

Plotting bot success metrics — before and after the feedback loop.

If your chatbot already uses clearly defined success metrics, be sure to benchmark them before setting up your feedback loop or making any changes to how it runs. This will help you keep an eye out for unintended consequences, especially if you’re using a semi-automated feedback loop.
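Once you have a benchmark, checking for unintended consequences can be as simple as comparing each metric against its baseline and flagging anything that moved the wrong way. This sketch assumes you store metrics as plain name-to-value dictionaries and that you know, per metric, whether higher is better. The metric names and numbers are illustrative only.

```python
def compare_to_baseline(baseline, current, higher_is_better):
    """Report the change in each metric and flag regressions.

    baseline and current map metric names to numbers; higher_is_better
    maps the same names to True or False.
    """
    report = {}
    for name, before in baseline.items():
        delta = current[name] - before
        improved = delta > 0 if higher_is_better[name] else delta < 0
        report[name] = {
            "delta": delta,
            "regressed": delta != 0 and not improved,
        }
    return report

# Made-up numbers: the fallback rate dropped, but so did goal completion.
baseline = {"fallback_rate": 0.25, "goal_completion": 0.75}
current = {"fallback_rate": 0.125, "goal_completion": 0.5}
direction = {"fallback_rate": False, "goal_completion": True}
report = compare_to_baseline(baseline, current, direction)
```

In this example the drop in goal completion would be flagged as a regression even though the fallback rate improved, which is exactly the kind of side effect a benchmark helps you catch.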

Measuring intent coverage over time.

The two most frustrating chatbot experiences are misunderstanding your user, and having to repeatedly tell them you don’t understand what they’re asking. Periodically taking a random sample of conversational turns and assessing whether the chatbot mapped the user’s message to the correct intent will tell you if your feedback loop is increasing intent coverage over time.
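Here’s one way that periodic check might look in code. It assumes you’ve hand-labeled a random sample of turns with the intent the user actually meant, and that each labeled turn records the `period` it came from, the `predicted_intent`, and the `correct_intent`. Those field names and the example intents are hypothetical.

```python
from collections import defaultdict

def intent_accuracy_by_period(labeled_turns):
    """Share of sampled turns mapped to the correct intent, per period.

    Each labeled turn is a dict with "period", "predicted_intent", and
    "correct_intent" (hypothetical field names chosen for this sketch).
    """
    tallies = defaultdict(lambda: [0, 0])  # period -> [correct, total]
    for turn in labeled_turns:
        tally = tallies[turn["period"]]
        tally[0] += turn["predicted_intent"] == turn["correct_intent"]
        tally[1] += 1
    return {
        period: correct / total
        for period, (correct, total) in sorted(tallies.items())
    }

# Made-up labels for two review periods.
labeled = [
    {"period": "round-1", "predicted_intent": "help", "correct_intent": "help"},
    {"period": "round-1", "predicted_intent": "fallback", "correct_intent": "refund"},
    {"period": "round-2", "predicted_intent": "refund", "correct_intent": "refund"},
    {"period": "round-2", "predicted_intent": "help", "correct_intent": "help"},
]
accuracy = intent_accuracy_by_period(labeled)
```

With small samples these rates will be noisy, so treat a change between two periods as a prompt to look at the underlying conversations rather than as proof on its own.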

Developing a measure of momentum.

Once you’ve established a feedback loop, defined performance metrics, and collected data across a few rounds of improvement, you can start to see how your bot is really performing. Track how the metrics change over time, and analyze performance data to identify further opportunities for improvement.

Embrace Feedback Loops for Competitive Advantage

As chatbot technology and conversational AI toolsets become increasingly mature, your users will have higher expectations, and you’ll have to work to set yourself apart from your competition.

Getting a feedback loop going — even in a small way — will allow you to adapt to changing circumstances. This should improve user experience and the value your chatbot provides.
